Fozia Aman

Consultant

A nationwide low-stakes accountability program in Tanzania improved learning outcomes, but at the cost of more student dropouts.

Strengthening accountability is an appealing way to improve school performance, but there is concern that such efforts could distort behavior if the stakes are high: schools and teachers could teach to the test, neglect unrewarded activities, or simply cheat. As a result, a number of countries (e.g., Brazil, Chile, Colombia, Mexico, and Pakistan) have turned to low-stakes accountability, such as publishing report cards or other information about school performance. Tanzania’s Big Results Now (BRN) School Ranking initiative was a similar effort designed to revamp the country’s accountability system. However, a recent RISE working paper shows that the program had mixed effects.

This study contributes to our understanding of accountability by showing that low-stakes accountability can work, but that even low-stakes efforts can have unintended consequences. It suggests that public information programs need careful design to produce positive results while avoiding perverse outcomes.

Improving accountability is a critical focus of the RISE Programme’s work on understanding how to improve learning outcomes. In recent decades, education systems have typically focused on accountability for schooling, with systems measuring and holding officials accountable for the proportion of children enrolled in school. As attention turns to addressing the learning crisis, with many children in school but not learning, accountability structures need to reorient around achieving learning.

The government of Tanzania rolled out the Big Results Now Education program in 2012 as part of a larger flagship government reform to address the deteriorating quality of education in the country. The School Ranking Initiative ranked schools at the district and national levels based on the average score of their students on the Primary School Leaving Examination (PSLE). Rankings were shared directly with schools through District Education Officers (DEOs) and published online. The intervention had backing from the central government to reinforce implementation. The program designers expected that publicizing information about school performance would induce better outcomes by raising the pressure that parents and local bureaucrats exert on schools.

The researchers hypothesized that schools ranked at the bottom of their district would face more pressure to improve exam performance than schools ranked in the middle. To test this, they estimated the difference in exam performance between schools ranked in the middle and at the bottom of their districts, and then compared this gap in the periods before and after the start of BRN (a difference-in-differences comparison). The result is a measure of how much schools at the bottom of the ranking improved relative to those in the middle.
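The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the records, the group labels, and the function names are hypothetical, and the numbers are made up so that the result matches the 6 percentage point gap reported in this post. The actual study also controls for past performance and district trends, which this sketch does not.

```python
from statistics import mean

# Hypothetical records: (period, rank group, pass rate in the following year, in percent).
# These values are invented for illustration and are NOT data from the study.
records = [
    ("pre", "bottom", 40.0), ("pre", "bottom", 42.0),
    ("pre", "middle", 40.0), ("pre", "middle", 42.0),
    ("post", "bottom", 48.0), ("post", "bottom", 50.0),
    ("post", "middle", 42.0), ("post", "middle", 44.0),
]


def group_mean(rows, period, group):
    """Average pass rate for one rank group in one period."""
    return mean(rate for p, g, rate in rows if p == period and g == group)


def diff_in_diff(rows):
    """Bottom-minus-middle gap after BRN, minus the same gap before BRN."""
    gap_pre = group_mean(rows, "pre", "bottom") - group_mean(rows, "pre", "middle")
    gap_post = group_mean(rows, "post", "bottom") - group_mean(rows, "post", "middle")
    return gap_post - gap_pre


estimate = diff_in_diff(records)
print(f"Difference-in-differences estimate: {estimate:.1f} percentage points")
```

Subtracting the pre-BRN gap removes any pre-existing difference between bottom-ranked and middle-ranked schools, so what remains is the change in the gap that coincides with the start of the program.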

To examine a broader range of policy impacts, the study also assessed other exam-related measures of school performance: the pass rate, the number of students who passed, and the number of exam-sitters.

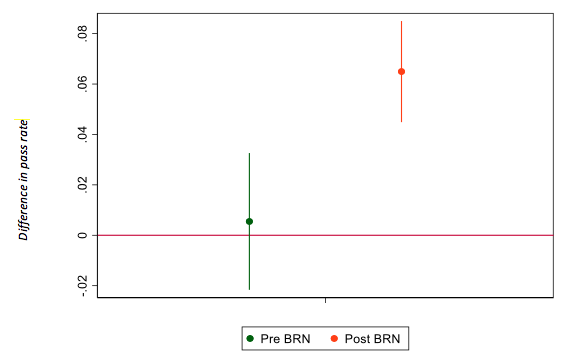

The authors found that schools ranked at the bottom of their district did indeed improve more than those in the middle. The dots in Figure 1 capture the difference in the average pass rate between schools ranked at the bottom and in the middle of their districts, after controlling for past performance and district trends. The results show that during the post-BRN period, schools initially ranked in the bottom decile of their district increased their pass rate in the subsequent year by 6 percentage points relative to schools in the middle deciles. In other words, while schools in the middle deciles may also have improved, those in the bottom decile improved by 6 percentage points more on average. In contrast, there was no difference in the pre-BRN period. The study also found a significant increase in the absolute number of students who passed. The reform led to an average of 1.8 additional exam passers per school, a 21 percent increase relative to the pre-BRN period.

Figure 1: The difference in average pass rates between schools ranked at the bottom and middle of their districts

Troublingly, the study also found a significant decrease in enrollment and in the absolute number of test takers in schools ranked at the bottom of their district during the same period. While this finding may suggest that schools artificially boosted their performance by excluding low-performing students from sitting the exam, the increase in the absolute number of students who passed the PSLE indicates that schools did in fact improve the performance of at least some students. All the same, the reduction in the number of test takers is persuasive evidence that BRN-induced pressures led some low-performing schools to strategically exclude some students from sitting the PSLE.
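A small worked example shows why both readings can hold at once. The pass rate is the number of passers divided by the number of exam-sitters, so it rises either when more students pass or when fewer students sit the exam. The sketch below splits a change in the pass rate into those two parts for a single hypothetical school; the figures and function names are assumptions chosen for illustration, and this decomposition is not the method used in the study.

```python
def pass_rate(passers, sitters):
    """Pass rate in percent."""
    return 100.0 * passers / sitters


def decompose_change(passers_before, sitters_before, passers_after, sitters_after):
    """Split the change in pass rate into a part due to more passers
    and a part due to fewer exam-sitters.

    The split uses a counterfactual pass rate: the new number of passers
    divided by the old number of sitters.
    """
    before = pass_rate(passers_before, sitters_before)
    after = pass_rate(passers_after, sitters_after)
    counterfactual = pass_rate(passers_after, sitters_before)
    return {
        "total": after - before,
        "from_more_passers": counterfactual - before,
        "from_fewer_sitters": after - counterfactual,
    }


# Hypothetical school: 8 of 40 sitters pass before, 10 of 30 sitters pass after.
result = decompose_change(8, 40, 10, 30)
for name, value in result.items():
    print(f"{name}: {value:.1f} percentage points")
```

In this made-up case the school genuinely helps two more students pass, yet most of the headline gain in its pass rate comes from the drop in the number of sitters.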

The researchers also examined several hypotheses about the mechanisms through which the performance improvements could have been achieved.

It’s not resource reallocation. Given the considerable discretion that District Education Officers (DEOs) have over the human- and physical-resource decisions of schools, one concern was that the results were driven by DEOs taking resources away from decent schools and reallocating them to the worst performers. If that were the case, the improvements in poorly performing schools could have come at the expense of other schools. Using detailed survey data, the authors found no evidence of resource reallocation towards the schools at the bottom.

It’s not about the parents. Although information about school rankings was made available on the national website, survey data collected for the study showed minimal parental awareness of the district ranking, which rules out parental pressure as the source of change.

It might be top-down pressure by local bureaucrats. The reform placed substantial pressure on DEOs to meet certain targets for exam performance in their district, and they fed this pressure down to the schools. For example, DEOs held annual meetings with all the head teachers in their district to discuss their exam performance. In turn, head teachers who care about their reputation and career progression faced stronger incentives to demonstrate their competence through good exam performance. Although the analysis was not able to conclusively prove or disprove this mechanism, this is the most plausible interpretation of the results.

This study provides an encouraging story about the potential of a low-stakes accountability system to drive school performance through top-down bureaucratic and reputational pressure. But the negative consequences of the reform also serve as a cautionary tale for policy-makers who are considering similar interventions: the risk of teachers and schools “gaming” incentive schemes does not disappear when the stakes are low. Policy-makers should account for these potential distortions when designing programs, for example by rewarding both the average performance of a school and its student transition rates.

Finally, the study contributes to the evidence on the effectiveness of nationwide accountability reforms. Accountability systems for learning are more complex than those for schooling. As countries look to improve their learning outcomes, including through reforming their accountability systems, this study sheds light on one approach that can contribute to this goal.

RISE blog posts and podcasts reflect the views of the authors and do not necessarily represent the views of the organisation or our funders.