Marla Spivack

World Bank

Blog

Anyone who follows RISE closely (or just knows what the acronym stands for) will know that we are focused on “systems” of education. Our focus on systems might seem obvious to some, but to others it might be puzzling. The systems focus can sometimes feel abstract, even to those of us who work on the programme.

That’s why I was excited to read chapter 6 of Brian Levy’s new book on the governance of South Africa’s education system. This chapter, “Explaining the Western Cape performance paradox: an econometric analysis” by Gabrielle Wills, Debra Shepherd, and Janeli Kotze, provides a concrete, compelling measurement of how much education systems matter in determining student outcomes, relative to inputs to education such as per-student funding, teacher qualifications, or school infrastructure.

When students in the Western Cape are compared with students in the Eastern Cape, attending school in the Western Cape turns out to be the single biggest determinant of literacy (and the second biggest of numeracy), even after statistically adjusting for all the observable ways in which the students and schools differ. Roughly, Western Cape schools produce literacy scores a full standard deviation higher (an effect size of 1) with observationally equivalent students and the same measured inputs. This is a large, meaningful difference: an effect size of 1 is roughly what separates the learning outcomes in Indonesia from those of the OECD.
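An “effect size” is a difference in average test scores expressed in units of the score standard deviation. The arithmetic can be sketched in Python as below, using made-up scores purely for illustration; the helper name `effect_size` and the choice of a pooled standard deviation (Cohen’s d) as the denominator are assumptions, not necessarily how Wills et al. standardised their scores.

```python
from statistics import mean, stdev

def effect_size(group_a, group_b):
    """Standardised mean difference (Cohen's d with a pooled SD).

    Hypothetical helper: how far apart two groups' average scores are,
    in units of the pooled standard deviation.
    """
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * stdev(group_a) ** 2
                  + (n_b - 1) * stdev(group_b) ** 2) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5

# Made-up scores (not SACMEQ data): both groups have an SD of 60 points.
western = [540, 600, 660]
eastern = [480, 540, 600]
print(round(effect_size(western, eastern), 2))  # 1.0
```

Here the groups’ averages differ by 60 points and the pooled standard deviation is 60 points, so the effect size is 1: the average student in the first group scores one standard deviation above the average student in the second.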

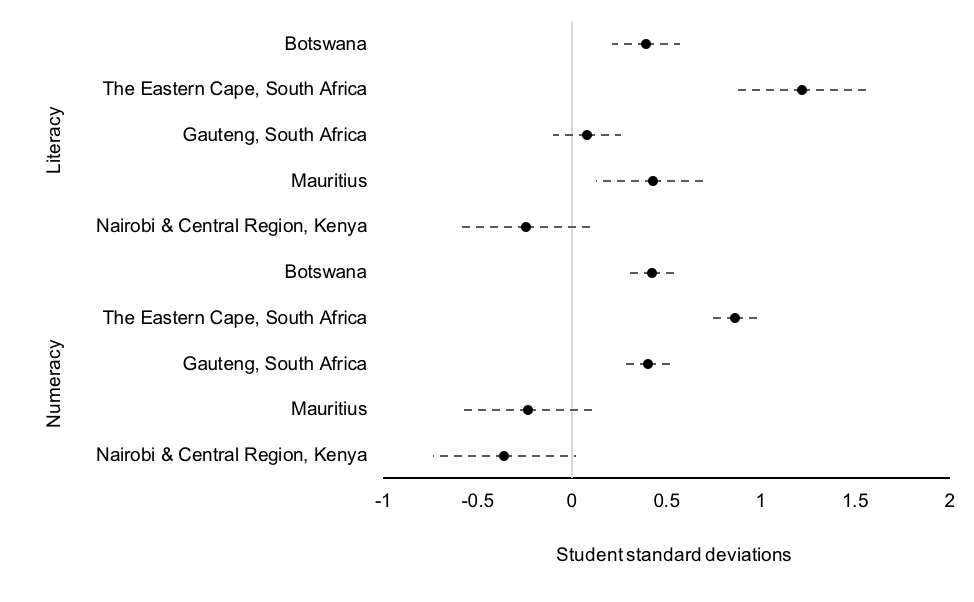

This strongly suggests that something about the “system” in the Western Cape, as compared with the Eastern Cape, is a critical determinant of learning. The authors also compare students in the Western Cape to students in other South African provinces and in other countries. In these comparisons, they find that “the Western Cape systems effect” is often important but not always positive, which may reflect the Western Cape’s education system being stronger than some comparators and weaker than others.

Wills et al. use data from the Southern and Eastern Africa Consortium for Monitoring Educational Quality (SACMEQ), a survey that is regionally representative within South Africa and that tests children’s literacy and numeracy while also collecting a range of indicators of student, teacher, and school characteristics. They use these data to separate out the effects of the “education system” from the effects of other schooling inputs.

To do this, the authors use propensity scores to weight observations. This creates groups of students in a range of geographies (Botswana, Gauteng province, the Eastern Cape, Nairobi and Central province in Kenya, and Mauritius), each of which is nearly equivalent to a group of students in the Western Cape. The authors then use a linear regression to measure the relationship between a comprehensive set of observable inputs to schooling and students’ literacy and numeracy (measured, as is usual, in standard deviations, so that coefficients can be read as “effect sizes”).
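The weighting step can be illustrated with a deliberately simple sketch. It assumes a single categorical student characteristic (a hypothetical home-language variable), in which case the estimated propensity score in each category is just the share of students in that category who attend school in the Western Cape. It also assumes “odds” weights (1 for Western Cape students, p / (1 - p) for comparison students), a common way to make a comparison group resemble a target group; the chapter may have used a different propensity model or weighting scheme.

```python
from collections import Counter

# Hypothetical records: (in_western_cape, home_language). Illustrative only, not SACMEQ data.
students = [
    (1, "A"), (1, "A"), (1, "A"), (1, "B"),
    (0, "A"), (0, "B"), (0, "B"), (0, "B"),
]

def propensity_by_cell(records):
    """Share of students in each covariate category who attend school in the Western Cape.

    With a single categorical covariate, this cell share is the fitted
    propensity score from a fully saturated model.
    """
    totals = Counter(cat for _, cat in records)
    treated = Counter(cat for wc, cat in records if wc == 1)
    return {cat: treated[cat] / totals[cat] for cat in totals}

def odds_weights(records):
    """Weight 1 for Western Cape students and p / (1 - p) for comparison students."""
    p = propensity_by_cell(records)
    # A comparison student's category always contains a comparison student, so p < 1 there.
    return [1.0 if wc == 1 else p[cat] / (1 - p[cat]) for wc, cat in records]

def weighted_share(records, weights, group, category):
    """Weighted share of students in `group` (1 = Western Cape, 0 = comparison) in `category`."""
    num = sum(w for (wc, cat), w in zip(records, weights) if wc == group and cat == category)
    den = sum(w for (wc, _), w in zip(records, weights) if wc == group)
    return num / den

weights = odds_weights(students)
unweighted = [1.0] * len(students)
print(round(weighted_share(students, unweighted, 0, "A"), 3))  # comparison, before weighting: 0.25
print(round(weighted_share(students, weights, 0, "A"), 3))     # comparison, after weighting: 0.75
print(round(weighted_share(students, weights, 1, "A"), 3))     # Western Cape: 0.75
```

Before weighting, a quarter of the comparison group falls in category “A” against three quarters of the Western Cape group; after weighting, the two groups match on this characteristic, which is the sense in which the weighted groups are “nearly equivalent”.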

In addition to all observable education inputs (including community, school, teacher, and student characteristics), they add an indicator for whether the school is in the Western Cape or in the comparison geography.

If the propensity weights Wills et al. assigned created equivalent groups of students across each pair of geographies, and if the more than three dozen observable characteristics they included in their regression fully characterize the inputs to schooling, then we can interpret the coefficient on the Western Cape indicator as a measure of the “Western Cape effect”: the difference in learning outcomes between a student attending a school in the Western Cape and an observationally equivalent student attending an observationally equivalent school in another geography. We should bear in mind, though, that all the usual caveats about identifying causality from observational data apply, even to this careful analysis.
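The regression step, and why its interpretation hinges on the observables being complete, can be sketched with synthetic data. This is a minimal weighted-least-squares example solved through the normal equations. The single covariate (a hypothetical home-resources index), the “system effect” of 1.0 built into the data, and the helper names are all illustrative assumptions; the chapter’s actual specification includes more than three dozen covariates.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def wls(X, y, w):
    """Weighted least squares: solve (X' W X) beta = X' W y."""
    k = len(X[0])
    xtwx = [[sum(wi * xi[i] * xi[j] for xi, wi in zip(X, w)) for j in range(k)] for i in range(k)]
    xtwy = [sum(wi * xi[i] * yi for xi, yi, wi in zip(X, y, w)) for i in range(k)]
    return solve(xtwx, xtwy)

# Synthetic students: (western_cape, home_resources). Hypothetical, not SACMEQ data.
data = [(1, 0.5), (1, 1.0), (1, 1.5), (1, 2.0), (0, 0.0), (0, 0.5), (0, 1.0), (0, 1.5)]
# Scores built with an assumed system effect of 1.0 SD plus 0.4 SD per unit of home resources.
y = [1.0 * wc + 0.4 * res for wc, res in data]
weights = [1.0] * len(data)  # stand-in for propensity weights; uniform here for simplicity

# Columns: intercept, Western Cape indicator, covariate.
full = wls([[1.0, wc, res] for wc, res in data], y, weights)
# Same regression with the covariate omitted (an "unobserved" input).
short = wls([[1.0, wc] for wc, _ in data], y, weights)
print(round(full[1], 3))   # 1.0: the indicator recovers the built-in system effect
print(round(short[1], 3))  # 1.2: the indicator also absorbs the omitted input
```

When the covariate is included, the indicator’s coefficient equals the system effect built into the data. When it is left out, the indicator also picks up the fact that Western Cape students in this toy sample have more home resources, which is exactly why the interpretation depends on the observables fully characterizing the inputs to schooling.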

The comparison is neatest between the Western and Eastern Cape, where data availability and student characteristics allowed the authors to construct the most closely comparable groups of students.

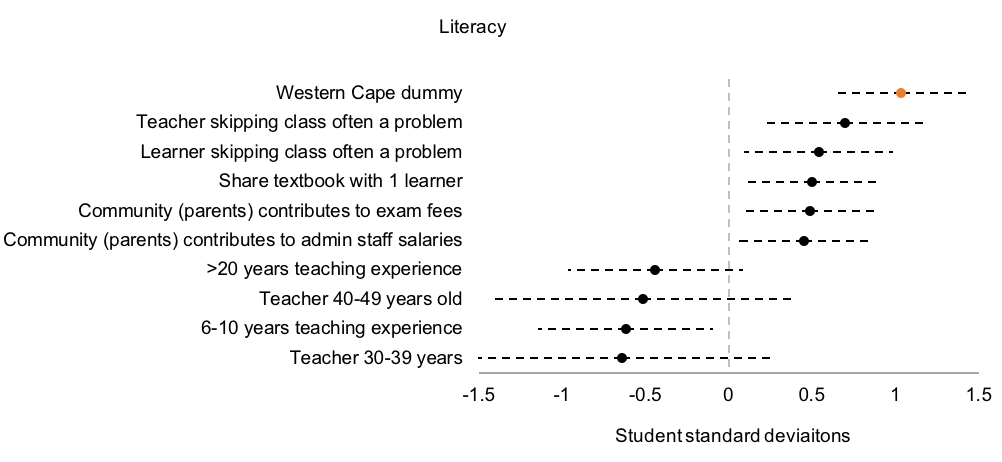

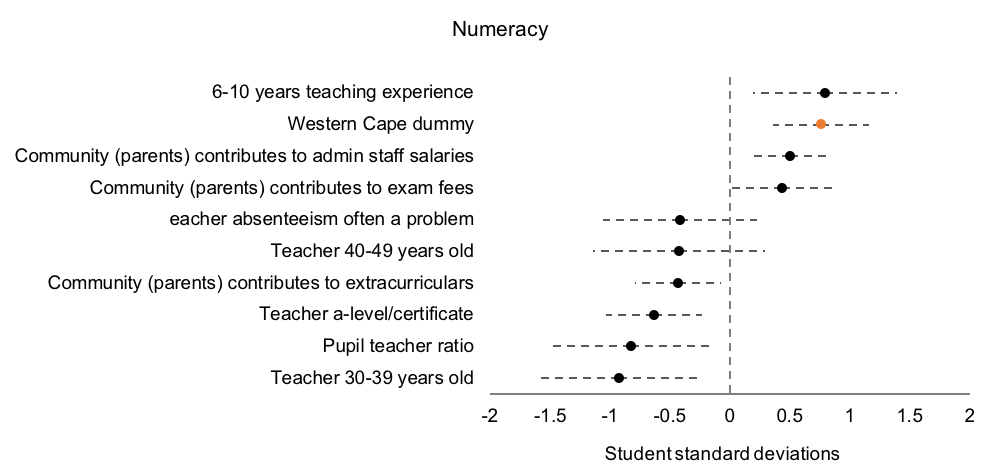

Figures 1 and 2 show the coefficients on the variables with the largest relationships with student literacy and numeracy in the regressions comparing the Western and Eastern Cape. Wills et al. included dozens of other observables in their regressions, but I show only the 10 largest here for visual clarity. On literacy, equivalent students in the Western Cape score 1.04 standard deviations higher than students in the Eastern Cape; on numeracy, they score 0.75 standard deviations higher.
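One way to get a feel for these magnitudes is to ask where a student at the middle of the comparison distribution would land if their score rose by the estimated effect. The sketch below does this with Python’s standard-library `statistics.NormalDist`, under the assumption (not something the chapter reports) that scores are roughly normally distributed; the helper name `percentile_after_shift` is hypothetical.

```python
from statistics import NormalDist

def percentile_after_shift(sd_gain):
    """Percentile of the comparison distribution reached by a median student whose
    score rises by `sd_gain` standard deviations, assuming normally distributed scores."""
    return 100 * NormalDist(mu=0.0, sigma=1.0).cdf(sd_gain)

# Western Cape coefficients from the Western vs Eastern Cape comparison in the text.
for subject, sd_gain in {"literacy": 1.04, "numeracy": 0.75}.items():
    print(f"{subject}: 50th -> {percentile_after_shift(sd_gain):.0f}th percentile")
# literacy: 50th -> 85th percentile
# numeracy: 50th -> 77th percentile
```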

Note the magnitude of the relationship between being in the Western Cape and student outcomes, relative to other observable inputs to education. For literacy, it is the covariate with the largest relationship; for numeracy, it is the covariate with the second largest. Being in the Western Cape appears to do more to improve students’ outcomes than many of the other measurable inputs to schooling, or than the measurable, immutable student characteristics that we know affect learning.

When the authors compare the Western Cape to other provinces and countries, they find more mixed results (see Figure 3). “The Western Cape effect” is negative in some cases and positive in others, ranges in magnitude from half to less than a tenth of a standard deviation in student scores, and isn’t statistically significant in several cases. The negative Western Cape effect in the comparisons with Nairobi and Central Kenya and with Mauritius could reflect those education systems being stronger than the Western Cape’s, though we should interpret these results cautiously as they are not statistically significant. Data availability constraints and more pronounced differences in student characteristics make it harder to construct equivalent groups in these comparisons, which could explain why the authors find a stronger systemic effect when comparing the Western and Eastern Cape.
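“Not statistically significant” means that an estimated coefficient is small relative to its standard error, so the data cannot rule out a true effect of zero. A minimal sketch of the conventional check, a two-sided test at the 5 per cent level using a normal approximation, is below. The coefficient and standard-error pairs are made up, since standard errors are not reported in this post, and the helper names are hypothetical.

```python
from statistics import NormalDist

def two_sided_p_value(coef, se):
    """Two-sided p-value for the null hypothesis coefficient = 0 (normal approximation)."""
    z = coef / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def confidence_interval(coef, se, level=0.95):
    """Normal-approximation confidence interval for a regression coefficient."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return coef - z_crit * se, coef + z_crit * se

# Hypothetical (coefficient, standard error) pairs, not taken from the chapter.
for coef, se in [(0.50, 0.10), (-0.08, 0.12)]:
    low, high = confidence_interval(coef, se)
    p = two_sided_p_value(coef, se)
    print(f"coef={coef:+.2f}  95% CI=({low:+.2f}, {high:+.2f})  p={p:.3f}  significant={p < 0.05}")
```

The second, negative estimate has an interval that spans zero, so on its own it cannot distinguish a weaker Western Cape system from no difference at all, which is why such results call for caution.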

There are numerous component parts of an education system that can either promote or impinge on student outcomes in complicated ways. We can think of these as the other variables Wills et al. include in their analysis. RISE calls these the “proximate determinants” of education outcomes. Vast academic and policy literatures examine the proximate determinants of learning, and these papers often address questions that follow a formula: what is the effect on the average child of exposure to a given set of learning conditions in a given setting? Questions like “What is the effect of teacher training on learning?”, “What is the effect of missing textbooks on learning?”, and “What is the effect of a new pedagogical approach on learning?” all follow this formula.

These questions about the proximate determinants of learning are limited in two ways. First, the effects of any of these proximate determinants on children’s outcomes differ across contexts. RISE is interested in understanding the features of systems that mediate these varied effects.

Second, the features of individual components are not generated randomly. Instead, the proximate determinants of education outcomes are themselves a product of the conditions and constraints faced by politicians, bureaucrats, administrators, teachers, parents, and students. For instance, one of the proximate determinants in this analysis, whether “teachers skipping class are often a problem”, is, not surprisingly, a large correlate of student learning. But whether or not a teacher skips class is itself an outcome of the operation of the education system.

The features that mediate the differential impacts of interventions, and the conditions and constraints that produce the proximate determinants of outcomes, are what RISE papers have called the “determinants of the proximate determinants” of education outcomes. Wills et al.’s analysis quantifies the magnitude of the relationship between the system itself and the outcomes of students in the Western and the Eastern Cape, even after controlling for all observables. Because those observables include proximate determinants that the system itself helps to shape, the Western Cape coefficient captures only the system’s influence over and above them. If one also counted the system’s influence through the “determinants of the proximate determinants”, the total system impact would likely be even larger. Their findings are yet more evidence of the importance of RISE’s systems focus.
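The argument that controlling for proximate determinants understates the system’s total impact can be illustrated with a toy simulation. Everything in it is hypothetical: the system is assumed to raise teacher attendance, attendance is assumed to raise scores, and the system is also given a direct effect. Regressing scores on the Western Cape indicator alone recovers the total effect; adding attendance as a control (done here with the Frisch-Waugh-Lovell residualisation trick) leaves only the direct part.

```python
from statistics import mean

def slope(x, y):
    """OLS slope of y on x with an intercept: cov(x, y) / var(x)."""
    mx, my = mean(x), mean(y)
    return sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sum((xi - mx) ** 2 for xi in x)

# Hypothetical schools: 1 = Western Cape, 0 = comparison province.
wc = [1, 1, 1, 0, 0, 0]
baseline = [0.6, 0.7, 0.8, 0.6, 0.7, 0.8]                   # attendance not caused by the system
attendance = [b + 0.1 * w for b, w in zip(baseline, wc)]  # assumed: system raises attendance by 0.1
DIRECT_EFFECT, ATTENDANCE_EFFECT = 0.5, 2.0               # assumed effects on scores, in SD units
scores = [DIRECT_EFFECT * w + ATTENDANCE_EFFECT * a for w, a in zip(wc, attendance)]

# Total system effect: regress scores on the indicator alone.
total = slope(wc, scores)

# Effect "controlling for" attendance: residualise the indicator on attendance,
# then regress scores on that residual (Frisch-Waugh-Lovell).
b_wa = slope(attendance, wc)
wc_resid = [w - mean(wc) - b_wa * (a - mean(attendance)) for w, a in zip(wc, attendance)]
controlled = slope(wc_resid, scores)

print(round(total, 3), round(controlled, 3))  # 0.7 0.5
```

In this toy setup the controlled estimate (0.5) misses the 0.2 SD that flows through attendance (0.1 × 2.0), so the total system effect (0.7) is larger, in line with the reasoning above.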

The Politics and Governance of Basic Education: a Tale of Two South African Provinces (edited by Brian Levy, Robert Cameron, Ursula Hoadley, and Vinothan Naidoo) can be downloaded for free via Oxford Scholarship Online.

RISE blog posts and podcasts reflect the views of the authors and do not necessarily represent the views of the organisation or our funders.