Felipe Barrera-Osorio

Vanderbilt University

Insight Note

Evidence matters for the effectiveness of public policies, but important informational frictions (that is, resistance to obtaining or using information on the subject at hand) sometimes prevent it from shaping policy decisions. Hjort et al. (2021) showed that reducing those frictions can change not only political leaders’ beliefs but also the policies they implement. One-way information, from research to policy, may sometimes be insufficient, though. Policymakers may be agnostic about the effectiveness of an intervention, or they may not know which of its features require adjustment. A process of policy experimentation may be needed (Duflo, 2017), in which policies are rigorously evaluated at a small scale, the findings of those evaluations inform the policy design, and a new evaluation determines the effectiveness of a fine-tuned version of the intervention, with the assessment continuing until the programme is ready to be scaled up. This process requires very close collaboration among government, implementers, and researchers.

The means by which evidence is produced is also important. A frequent criticism of researcher-designed interventions is that their results may not be relevant to policy. One reason is that a pilot programme’s participants or circumstances may be atypical, with the result that the experimental treatment, even if implemented with fidelity, may not achieve similar outcomes in other settings (Al Ubaydli et al., 2017; Vivalt, 2017). A second reason is that governments may lack the capability to implement with fidelity interventions tested in randomised controlled trials. A partnership between policymakers and researchers can help attenuate these concerns.

A recent experience in Colombia provides a good example of such a partnership at work. “Let’s All Learn to Read” is an ambitious programme to improve literacy skills among elementary schoolchildren (Grades K–5). Spearheaded by the Luker Foundation, a local non-governmental organisation, in collaboration with the Secretary of Education of Manizales (Colombia), the programme began with a systematic data collection effort in the municipality’s public primary schools to understand why students were failing to acquire the most basic academic skills. This led to several interventions over many years during which multidisciplinary teams of researchers working in close collaboration with local stakeholders and policymakers designed and evaluated different features of the programme.

The partnership has three critical members: The Luker Foundation, the Research Team, and Local Officials. The Foundation is a critical civil actor that plays a fundamental role supporting the work of the local government. It has a long-standing collaborative partnership with the Secretary of Education of Manizales. In a setting in which resources for innovation and evaluation are scarce, this partnership allows the government to leverage private resources and, at the same time, provides the flexibility and additional human capital to implement and evaluate several pilot programmes designed to foster learning in primary, secondary, and tertiary school. These innovative education initiatives, even when they are coordinated by the Luker Foundation, are always discussed and agreed with government officials. The government provides an entry point to public schools in the implementation of interventions and is key to the socialisation of the intervention with principals, teachers, and families.

In 2014, the Luker Foundation and the Inter-American Development Bank joined efforts to use large grants to finance a programme aimed at developing education strategies to improve student learning of reading and math in elementary schools. A first step was to develop a system to administer tests (EGRA and EGMA) at the beginning and end of the school year in every grade of every elementary public school. These continuous measures of student learning eventually became the basic data infrastructure that would allow researchers to understand which innovations worked and which ones required adjustment.

Against this backdrop, the Luker Foundation reached out to two teams of researchers to bring to the table expertise on evaluation and on interventions tried in other parts of the world. On the one hand, the Foundation contacted Felipe Barrera-Osorio (then at Harvard University, now at Vanderbilt University) with the purpose of acquiring a general overview of promising interventions in education around the world. One type of intervention, the provision of information about education to families, resonated with the work of the Foundation and the general driving force in education policy of the Secretary of Education of Manizales. In short, the local authorities were keen to position education as an important investment for the whole society. The actual design of the intervention was the product of discussions between the Foundation and the research team assembled by Barrera-Osorio at Harvard. It was a process that took into consideration both the optimal design of information programmes and the practical challenges of feasible implementation.

On the other hand, the Luker Foundation contacted a team of researchers at the Inter-American Development Bank that proposed to develop a series of interventions over the following five years to improve reading skills in the early grades. The initial goal was to change the way teachers taught early reading in first and second grade and combine this with a remediation intervention in third grade that would target students who were struggling to read, so as to better prepare them for the last two grades of elementary school. To that end, the team recruited local and international experts who developed and qualitatively tested the materials in the field before launching an experimental evaluation.

Both research teams emphasised the need for a proper evaluation of the effectiveness of these interventions in order to know what to scale up and what to revise. Even though the idea of randomising which schools received the interventions was met initially with some reluctance from public officials and school principals, both teams were eventually able to implement multiple rounds of randomised experiments. It was unfeasible to immediately implement all interventions with high fidelity, so the agreement was to roll them out sequentially and, eventually, to reach all schools when the programme proved successful.

The design of the interventions was set by continuous dialogue between the research team and the Foundation. In turn, the Foundation has a strong link with the local authorities, making sure that any intervention fits with the government agenda and priorities. The result, Let’s All Learn to Read, was a holistic intervention that included three components: training and support for teachers; the design and provision of materials, tutorials, and personalised materials for children; and information for parents so they could support their children at home. All of them were rigorously evaluated with randomised controlled trials. The results of the last two were recently published.

One process of policy experimentation began in April 2014 with the recognition that poor information can lead to an underinvestment in education, especially among low-income families. Conversely, providing accurate information about children’s progress in school and the returns on education can enhance parents’ enrolment decisions, their interaction with schools, and the support they provide to their children. The researchers sought to raise families’ investment in their children’s education at home and strengthen their relationships with the children’s teachers and schools by designing an information intervention that would increase engagement, communication, and trust among the parents, teachers, and schools. The intervention involved giving parents one-page report cards showing their children’s performance and their positions (expressed as percentile ranks) relative to the average performance of all the students in the same grade and school.
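As an illustration of the report-card metric described above, the following minimal sketch computes each student's percentile rank within their own grade and school. The record keys (`student`, `school`, `grade`, `score`) and the mid-rank treatment of ties are assumptions made for this example; the source does not specify how the ranks on the actual report cards were calculated.

```python
from collections import defaultdict


def percentile_ranks(records):
    """Return {student: percentile rank within the same school and grade}.

    `records` is a list of dicts with the hypothetical keys
    "student", "school", "grade", and "score".
    Ties are handled with the mid-rank convention (an assumption):
    rank = 100 * (number below + half the number tied) / group size.
    """
    # Collect all scores for each (school, grade) peer group.
    groups = defaultdict(list)
    for r in records:
        groups[(r["school"], r["grade"])].append(r["score"])

    ranks = {}
    for r in records:
        peers = groups[(r["school"], r["grade"])]
        below = sum(s < r["score"] for s in peers)
        tied = sum(s == r["score"] for s in peers)  # includes the student
        ranks[r["student"]] = 100.0 * (below + 0.5 * tied) / len(peers)
    return ranks
```

Under this convention a student at the exact middle of the peer group receives a rank of 50, which makes the card easy to read against the group average.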

For the experiment, more than 3,000 students in Grades 4 and 5 were randomised to receive the intervention (treatment group) or not (control group). The team telephoned parents and guardians of these students to schedule home visits, to be conducted in three parts. First, the agent would explain the objective of the study and provide consent materials. Second, the agent would administer a questionnaire, including asking the parents to state their beliefs regarding their children’s school performance. For families in the control group, the interview was the end of the visit. For the treatment group, the agent would proceed to part three by providing the appropriate report cards and explaining what they meant.

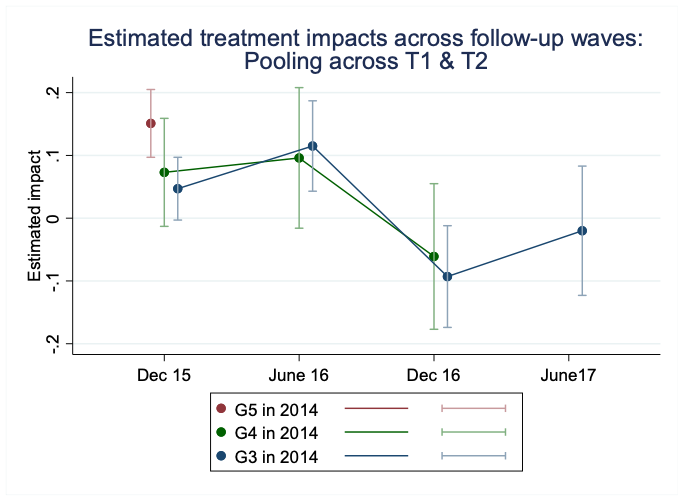

The results of the evaluation were presented by Barrera-Osorio, Gonzalez, Lagos, and Deming (2020). The intervention produced an initial pattern of small but significant short-run effects following the receipt of information on student performance and a menu of options to support parental investment (0.09 SD to 0.10 SD; see Figure 1). This suggests that parents respond to information by increasing their efforts to support their children’s education. However, the initial response decays unless new reports are available to them. The study also documented an increase in parent-teacher meetings and an update in parental beliefs about their child’s school performance, but there was no evidence they increased their investment in their children’s education at home. Another important finding was that the effects were greater for students with low baseline scores (on the order of 0.28 SD at the peak), which is consistent with these students and families having less accurate information about performance.

Another component of Let’s All Learn to Read was fine-tuned and evaluated by Alvarez Marinelli, Berlinski, and Busso in a 2021 study of small group tutorials for struggling readers. This component was implemented in most public schools with three consecutive cohorts of third-grade students. For each cohort, an initial literacy test was administered to identify students who were struggling to read and thus eligible to participate in the experiments. Half of the schools were then randomised into treatment and half into control groups. The research design compared eligible students in treated schools with similar eligible students in control schools.
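The design just described, with eligibility set by a baseline test and randomisation at the school level, can be sketched as follows. This is a simplified illustration, not the study's actual procedure: the function names, the fixed seed, and the score cutoff are hypothetical, and the source does not say whether the real assignment was stratified.

```python
import random


def randomise_schools(school_ids, seed=0):
    """Assign half of the schools to treatment and the rest to control.

    Whole schools (not individual students) are the unit of assignment.
    The seed is a hypothetical choice that makes the draw reproducible.
    """
    rng = random.Random(seed)
    shuffled = sorted(school_ids)  # fixed starting order before shuffling
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        school: ("treatment" if i < half else "control")
        for i, school in enumerate(shuffled)
    }


def eligible_students(baseline_scores, cutoff):
    """Return the students whose baseline literacy score is below `cutoff`.

    `baseline_scores` maps student id to score; `cutoff` is an assumed
    eligibility threshold, not a value reported in the source.
    """
    return [sid for sid, score in baseline_scores.items() if score < cutoff]
```

Outcomes would then be compared only among eligible students, grouped by the arm their school was assigned to.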

The tutorials administered to the students in the treatment schools consisted of 40-minute sessions provided during the school day, three times a week for up to 16 weeks. The sessions were conducted in small groups of six students or fewer and followed a simple structure in which the tutor explained the objectives and activities of the lessons, modelled the different exercises, and then both guided the students in their work and allowed them to work independently. The curriculum was based on a phonics approach, with lessons emphasising the ability to identify and manipulate units of oral language, recognise letter symbols and the sounds they represent, and use combinations of letters that represent speech sounds, as well as the reading of words and reading fluency with sentences and paragraphs.

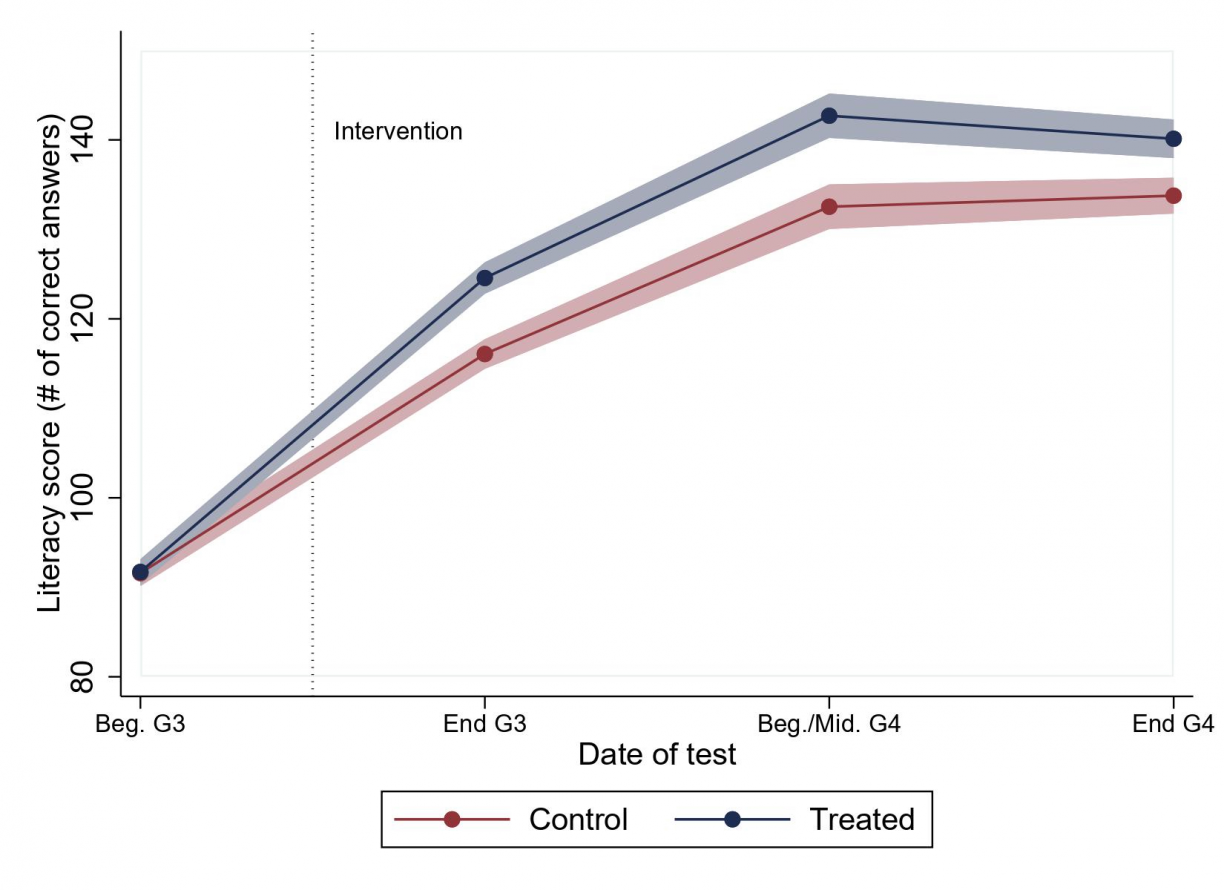

Note: The blue line shows the number of correct answers by eligible students in schools randomised to treatment. The red line shows the number of correct answers by students in schools randomised to control. Confidence intervals are at the 95% level. “Beg G3” refers to the measure taken in March (baseline) of third grade; “End G3” refers to the measure taken by the end of third grade, after treatment. “Beg./Mid G4” refers to the first measure taken in fourth grade; “End G4” refers to the measure taken by the end of fourth grade. The vertical dotted line marks the approximate time of treatment.

The intervention was effective at improving literacy skills. Immediately after the experiment finished (at the end of the third grade), the overall literacy scores of eligible students in treated schools improved by 0.270 of a standard deviation compared to those in control schools. These gains were still observed a year after the intervention ended and were homogeneous across students of differing initial ability.
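To make the unit of these results concrete, the sketch below expresses a treatment effect in standard deviations as the difference in mean scores divided by the control group's standard deviation. This is a deliberately simplified assumption for illustration; the published estimates may come from a regression with school-level clustering and covariates, which this example does not attempt to reproduce.

```python
from statistics import mean, stdev


def standardised_effect(treated_scores, control_scores):
    """Difference in means, scaled by the control group's sample SD.

    A value of 0.27 would mean treated students scored 0.27 control-group
    standard deviations higher on average. Both inputs are plain lists of
    test scores; scaling by the control SD is an assumed convention.
    """
    return (mean(treated_scores) - mean(control_scores)) / stdev(control_scores)
```

For example, with treated scores of 12, 14, and 16 and control scores of 8, 10, and 12, the means differ by 4 points and the control standard deviation is 2, so the function returns an effect of 2 standard deviations.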

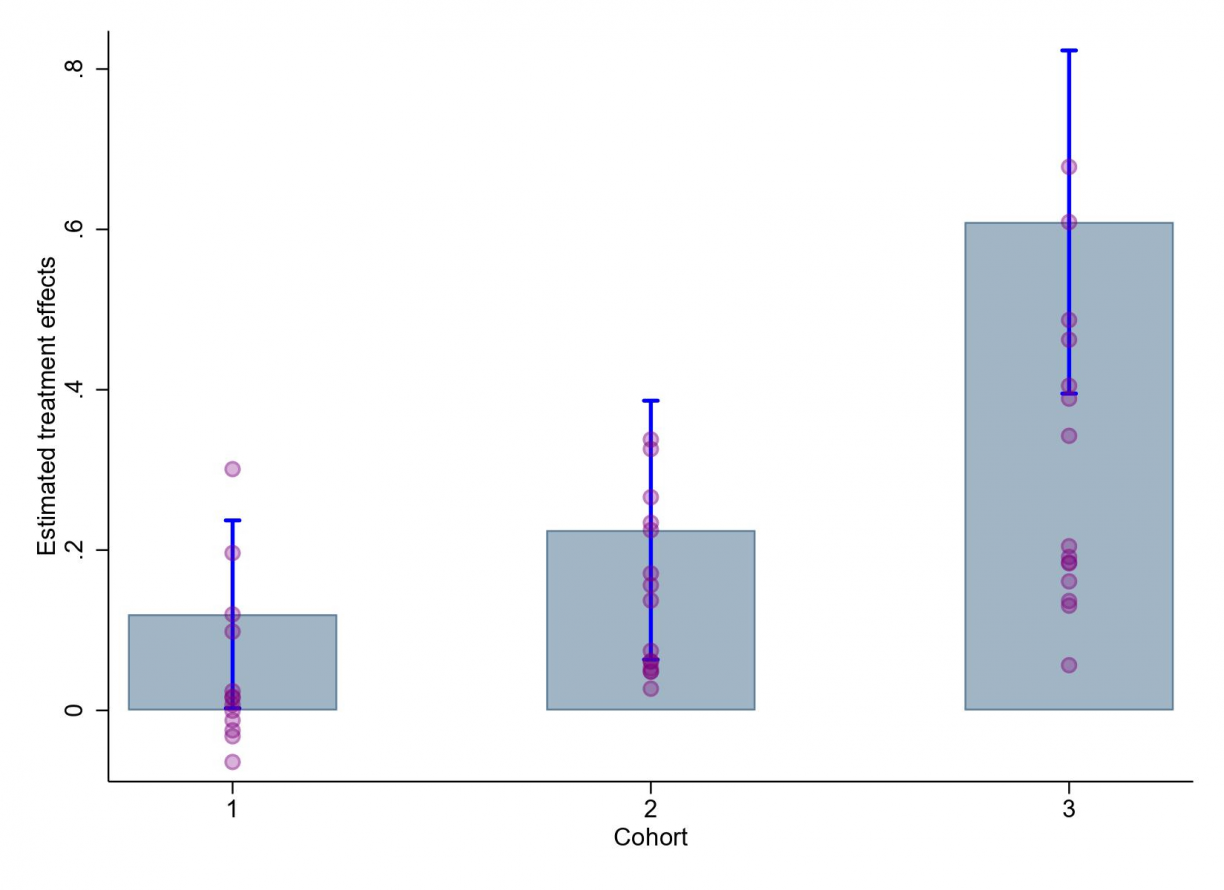

Thanks to a process of policy experimentation, the effectiveness of the intervention increased over time. The gains in literacy score by the end of the third grade grew as the programme went on, from 0.138 in cohort 1 to 0.222 in cohort 2 to 0.525 in cohort 3. In the first experimental cohort, the research team found limited gains from the intervention. In conversation with the implementing partners, it was decided to make several changes to address factors that likely explained the limited initial success. These changes concerned targeting, tutorial composition, dosage, and the design of the material. By continuing experimentation with subsequent cohorts, the research team was able to show that increasing dosage (i.e., by offering more sessions and make-up sessions) and improving material design were important in explaining the gains observed over time. It also showed that changes in tutorial size and composition were not important factors in explaining the success of subsequent interventions.

Note: Each bar shows the estimated treatment effect for the aggregate literacy score for each cohort. Each bar presents the corresponding 95 percent confidence interval. In addition, circles present the estimated treatment effects for each literacy subtask, estimated at each time horizon for each cohort.

The success of Let’s All Learn to Read was evident not only in its effectiveness but also in the quality of the policymaking process that ensued. Evidence-informed policy requires concerted efforts from all stakeholders, and this intervention was the product of many years of work by practitioners, policymakers, and researchers. Its realisation emerged from a clear vision, the willingness to learn from successes and mistakes, and the patience to wait for results. Recently, the programme was awarded the global WISE award, given each year to the most successful and innovative projects worldwide addressing current global educational challenges.

Thanks to the high quality of the programme and its rigorous evaluation, many of the programme’s components were expanded based on the results of the studies. The information programme was scaled up at the city level, reaching most students in public schools. Building on the findings of the study, the scaled-up intervention targeted lower-performing students with more frequent provision of information through biweekly mobile text messages. The small group tutorials component, along with the teacher professional development and pedagogical materials associated with it, has expanded in Colombia and in Panama and is being translated into Portuguese to be piloted in Brazil. It is planned to reach more than 1 million children by 2022.

Barrera-Osorio, F., Berlinski, S. and Busso, M. 2021. Effective Evidence-Informed Policy: A Partnership among Government, Implementers, and Researchers. RISE Insight 2021/035. https://doi.org/10.35489/BSG-RISE-RI_2021/035