Jason Silberstein

Blavatnik School of Government, University of Oxford


The findings of a new RISE working paper by Karthik Muralidharan and Abhijeet Singh are, implicitly, among the best arguments ever for why we need more systems-level thinking in education. The authors, who are co-PIs on the RISE India Country Research Team, ran a large-scale RCT evaluating a programme to strengthen school management in India, one that now operates in a staggering 600,000 schools (and counting) nationwide.

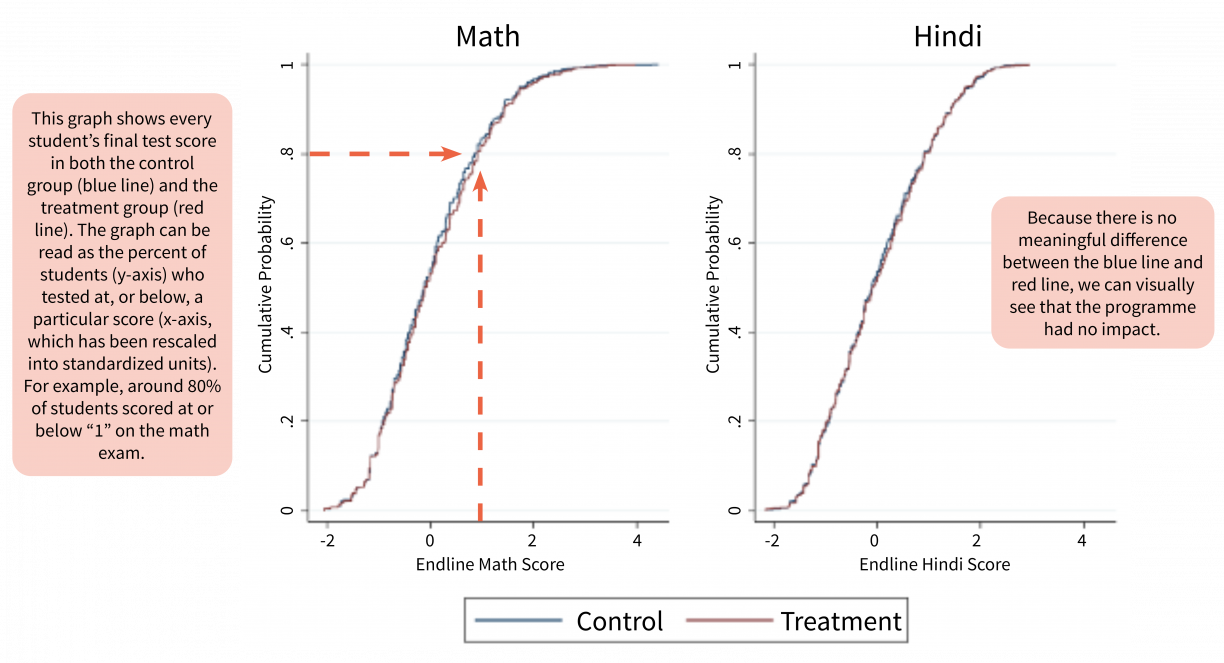

The result? No impact, whether measured through student absence, teacher absence, teaching practices, monitoring or support from bureaucrats and school committees, or student learning outcomes. As memorably summarised in a RISE conference presentation by Muralidharan, the programme evaluation reads a little like Dr. Seuss’ Green Eggs and Ham: “no impact here, no impact there, no impact anywhere”.

However, the paper does not content itself with estimating impact; it also addresses the more profound question of what caused the programme to fail. The usual culprits can be quickly ruled out: the paper repeatedly emphasises that this was a well-designed programme, and was relatively well-implemented. To diagnose the programme’s failure, the paper looks outward from the programme itself, and illuminates the mismatch between the programme and the system into which it was introduced.

In this blog, I map Muralidharan and Singh’s insights onto the RISE systems framework to isolate the parts of the system that the programme successfully changed, and the parts of the system that clashed with the programme and ultimately undermined its impact on learning. Each section of the blog describes how the programme interacted with a distinct part of the system. Figure 2 below presents the basic RISE framework, and summarises how each section of the blog fits within it.

The RISE framework divides the education system into four major relationships of accountability: Politics, Compact, Management, and Voice and Choice. Each relationship is, in turn, composed of different elements (information, delegation, motivation, support, and finance). If the different parts of this system are not aligned to produce learning, then the framework predicts that the system as a whole will not produce learning.

Relationships of accountability (columns) and the elements of each relationship (rows):

| Elements of each relationship | Politics: Between citizens and politicians | Compact: Between politicians and the Ministry of Education | Management: Between the Ministry of Education and schools | Voice and choice: Between communities/parents and schools |
|---|---|---|---|---|
| Information (how is progress against objectives measured) | | Section 5: While the programme evaluation found no impact on learning, the programme was scaled up anyway since it met other administrative goals set by the system. | Section 1: The programme generated good information on school quality and learning. | |
| Delegation (defining objectives) | | | Section 2: But the reason for collecting this information was unclear to school-level staff. | |
| Motivation (incentives to meet objectives) | | | Section 3: Schools had little incentive to act on the new information. | |
| Support (assistance to meet objectives) | | | Section 4: Schools had inadequate support to act on the information. | |
| Finance (resources to meet objectives) | | | | |

The paper focuses on a version of the programme that ran between 2014 and 2016 in nearly 2,000 public schools in the Indian state of Madhya Pradesh (MP). The MP Shaala Gunwatta (“School Quality Assurance”) programme had two big accomplishments:

The programme also envisioned a process of continuous monitoring, support, and updating of the SIPs by the CRCs, but this part of the programme was not successfully implemented (more on this in Section 4).

Overall, the parts of the programme that were successfully implemented changed the kind of information available to the Ministry of Education to measure school performance. Mapped to the RISE systems framework, the programme changed the Information element of the Management relationship (see Figure 2). Indeed, in some ways this was the Cadillac of information interventions. It produced an external, narrative account of school quality along seven domains, including domains directly linked to learning (like test scores and classroom teaching practices). This stands in contrast to the “thin” information most developing-country education systems collect about schools through their EMIS (India’s extensive UDISE+ system is one example), which often results in accounting-based accountability centred around inputs.

Shaala Gunwatta mainly acted on a single element of the Ministry-school relationship, information, but had little influence on other elements. For example, the programme did not change, and in fact conflicted with, how headteachers and teachers understood their core purpose and responsibilities within the system. This is what the RISE framework refers to as the “delegation” element of the management relationship. RISE researchers have highlighted the messiness of delegation. Vague delegation can be interpreted differently across multiple levels of the system, and what is explicitly delegated to schools (e.g. the curriculum) often conflicts with what other parts of the system implicitly ask them to do (e.g. the exams they are assessed on). Variations on both these issues seem to have been true in Shaala Gunwatta’s case, leading to the diagnosis that there was incoherence between programme information and underlying delegation.

Muralidharan and Singh (2020) provide strong evidence that this type of incoherence thwarted the programme. This comes through especially in the rich qualitative interviews they conducted with frontline officials to complement their RCT survey data. These interviews illuminate a clear “disconnect between objectives of the program and how it was actually perceived by those implementing it” (p.20). Consider, for example, this revealing quote from a headteacher (speaking about the scaled-up version of Shaala Gunwatta, known as Shaala Siddhi):

“There is so much paper work that by the time some teachers figured that out they had forgotten what was Shaala Siddhi itself” (p.18).

Whatever the programme’s de jure goals, the de facto programme goals in the eyes of frontline officials centred on process compliance. In a hypothetical system with different delegation, where the overriding expectation of teachers, schools, and lower-level officials was to increase student learning, this same information could have been used to make meaningful changes to teaching and learning. In Madhya Pradesh, where delegation focused on process rather than outcomes, the information was reduced to a paper-pushing exercise that never actually affected teaching or learning. This is the difference between making a plan in order to improve learning, and making a plan in order to make a plan.

Another probable reason the programme information had limited impact is that schools and teachers had little motivation to act on it. The motivation of school-level staff, as conceived by the RISE framework, is not limited to monetary incentives; rather, it is co-determined by a complex web of career structures such as where staff are placed, prevailing professional norms, the factors that determine career progression, and how teachers are mentored and supported. Since the programme was disconnected from any of these factors, this likely resulted in an incoherence between the information and motivation elements of the management relationship (see Figure 2).

In support of this hypothesis, Muralidharan and Singh (2020) point out that the information from the inspections and SIPs was untethered from any kind of incentives—extrinsic, intrinsic, positive, or negative—for school-level staff or CRCs (p.23). They also tease out a possible reason for this, which was that Shaala Gunwatta attempted to build an enabling atmosphere that emphasised co-operation while avoiding the concept of (top-down or horizontal) accountability entirely. There is a large literature showing that incentivising schools and teachers using “high-stakes” accountability mechanisms can badly backfire. For example, forthcoming work from the RISE Pakistan team shows that performance pay contracts attract higher value-add teachers and raise average test scores, but distort teacher pedagogy toward teaching-to-the-test. In trying to address these real problems, Shaala Gunwatta seems to have swung too far in the opposite direction. By inadequately linking information and motivation, it ended up giving implementers little reason to use the information to change what they were doing.

Another likely reason the information from the programme was not used by the system was that it was not linked to any effective mechanism for school support. Support is a fourth element of relationships in the RISE framework, and in this context describes the kind of follow-up the MP bureaucracy offered to schools to act on their SIPs. Muralidharan and Singh’s evaluation clearly demonstrates that, after the initial inspection and development of the SIP, not much happened. There was no change in the frequency, length, or kind of monitoring or support offered to schools by the key officials, the CRCs. The programme seems to have operated in one direction: while information went up, no support came back down.

The evaluation points to two potential reasons why the support element of the programme never came to fruition. First, the support role envisioned by the programme for the CRCs was inconsistent with their job and power within the bureaucracy. While the programme cast them as monitors and coaches, their actual job was largely to act as administrative pass-throughs between schools and the higher tiers of the system. Where the programme invested the CRCs with the agency to take local action and drive school-level changes, in practice the discretion for local decision-making rested with higher, block-level officials.

A second, complementary reason for the lack of support is that higher-ranking bureaucrats seem to have put little emphasis on whether or not CRCs delivered support to schools. As the evaluation keenly observes, “the program we studied worked till the point where outcomes were visible to senior officials” (p.24)—but no further. Top officials paid attention to the number of school assessments and SIPs uploaded, and these parts of the programme got done. At the same time, they did not monitor intermediate outcomes like support delivered to schools, much less the impact that support had at the school level.

A final insight from the paper on the complexity of education systems derives not from the study’s findings, but rather from its reception. The RCT element of Muralidharan and Singh’s study was conducted while the Shaala Gunwatta programme was expanding to 2,000 schools across the state of Madhya Pradesh. When the authors presented their disappointing RCT findings to the state government, the primary official reaction was to tweak the kind of paperwork produced by the programme (by, for example, increasing the complexity and administrative burden of the SIP). The government then proceeded to further scale up a programme with no impact on learning to 25,000 total schools across the state (an expansion that Muralidharan and Singh also evaluate in their paper and also find had no impact). In parallel, a modified version of the same programme was rolled out nationally. The programme is operating in around 600,000 schools across India today, with the goal of eventually reaching 1.6 million schools.

The study’s reception highlights the need to look beyond how the Ministry of Education holds schools accountable, and understand how education authorities are themselves held accountable by superordinate branches of government. The RISE framework terms this the Compact relationship. When the study introduced new information, focused on learning, into the Compact relationship, this information may have been ignored because the objective delegated to the Ministry of Education was not itself focused on learning.

Relationships of accountability (columns) and the elements of each relationship (rows):

| Elements of each relationship | Politics: Between citizens and politicians | Compact: Between politicians and the Ministry of Education | Management: Between the Ministry of Education and schools | Voice and choice: Between communities/parents and schools |
|---|---|---|---|---|
| Information (how is progress against objectives measured) | | Programme evaluation (focused on learning) | | |
| Delegation (defining objectives) | | Administrative view of success (not focused on learning) | | |
| Motivation (incentives to meet objectives) | | | | |
| Support (assistance to meet objectives) | | | | |
| Finance (resources to meet objectives) | | | | |

Muralidharan and Singh provide some evidence for this in hypothesising that the compact relationship in Madhya Pradesh, and at the national level, runs on an administrative view of success. Chief, Finance, and Education Ministers prize activity on paper that, much as in the Ministry-to-school relationship, successfully mimics the outward trappings of success, even if those results are ultimately paper-thin and fail to represent real changes in schools, classrooms, or students’ learning. This explains the divergence between the programme’s actual impact on learning and the administrative perception of success that led the programme to scale across the country.

I recently heard an excellent metaphor to illustrate systems change from RISE Research Directorate member Luis Crouch: “wolves change rivers”. When wolves were reintroduced into Yellowstone National Park after a 70-year absence, the cascading interactions between them and the ecosystem resulted in both expected and astonishing changes. As expected, the wolves hunted deer and reduced the deer population. More unexpectedly, the mere presence of wolves drove the deer to flee areas where they were easily caught, like the area’s valleys. Without the deer, vegetation rebounded along rivers, stabilising the riverbanks and redirecting the course of rivers. Understanding the full effects of the reintroduction of wolves into Yellowstone requires a deep understanding of the many interconnected relationships that determine a wolf’s place in and impact on that ecosystem.

Focusing only on the programme, as Shaala Gunwatta and its nationally scaled-up successor did, is like focusing exclusively on the wolf. This had some expected impacts, such as producing inspection reports and school improvement plans. However, the downstream effects of any programme (i.e. changing the river’s flow) depend on how it interacts with the system it is introduced into. Shaala Gunwatta hit a dead end because the wider system was not designed to act on the information it produced in the pursuit of raising learning outcomes. Instead of zooming in on the wolf, the findings from Muralidharan and Singh (2020), and RISE as a whole, argue for zooming out and better theorising how the wolf relates to the deer, the valleys, and the wider ecosystem.
