CIES 2018 - What Can We Learn from Applying an Education System Diagnostic?

The Comparative and International Education Society (CIES) Conference 2018 in Mexico City kicked off with a series of fantastic pre-conference workshops, to which a number of RISE researchers and affiliates made significant contributions. Laura Savage from the UK’s Department for International Development (DFID), along with Moira Faul from the University of Geneva and Raphaelle Martínez from the Global Partnership for Education (GPE), hosted a pre-conference workshop titled “What can we learn from applying an education system diagnostic?” The workshop featured RISE Research Director Lant Pritchett as a panellist, along with Dr. Mmantsetsa Marope, the Director of the UNESCO International Bureau of Education (IBE), and Koffi Segniagbeto of the International Institute for Educational Planning (IIEP) Pôle de Dakar.

The workshop started with the participants developing a “word cloud” based on the question: What do you think a system diagnostic should tell you? “Gaps” featured as one of the key words in the cloud, and the exercise laid the foundation for the rest of the session.

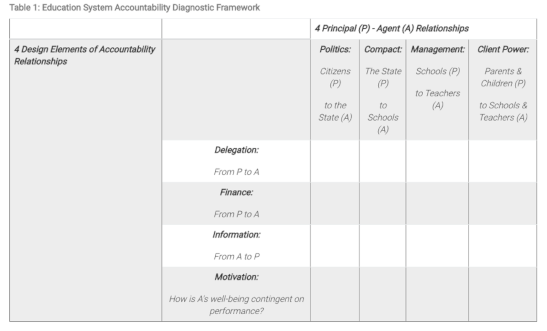

Subsequently, the panellists were invited to describe different system diagnostic tools used for education. Lant Pritchett began by explaining the RISE 4x4 model (pictured below and described in detail in the RISE Working Paper 15/005) which has been used by the RISE research teams. Since systems consist of actors, Pritchett explained that any system diagnostic should describe who the actors are in the system, what their decisions are based on, and why they make the decisions they do. The RISE 4x4 focuses on characterising the system actors, identifying the key relationships in the system, and analysing the components of those relationships.

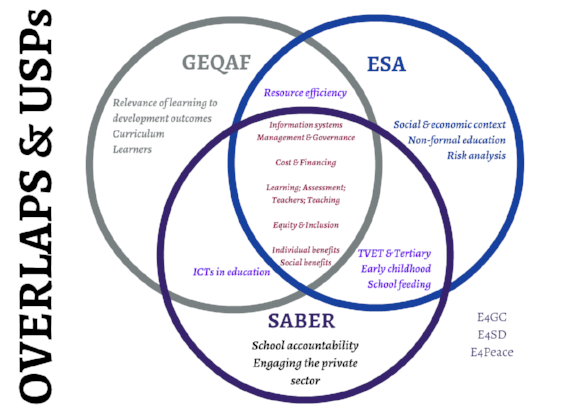

Dr. Mmantsetsa Marope gave a rich description of the General Education System Quality Analysis/Diagnosis Framework (GEQAF). She explained that GEQAF is a comprehensive tool (containing 15 analytical tools) which looks at all parts of the education system. Marope stated that GEQAF focuses on the critical constraints that impede the education system from doing what it should do, and allows stakeholders to think of targeted interventions. GEQAF works with the managers of the education system to close the disconnect between analytical knowledge and the actual management of the system. Marope described the end game of GEQAF as institutionalising the culture of a lifelong learning system.

Koffi Segniagbeto spoke about the methodological guidelines for Education Sector Analysis (ESA). Segniagbeto described how ESA methods enable relevant stakeholders to carry out a comprehensive analysis of the education sector in developing countries. ESA places governments at the centre of the process and promotes reforms. The guidelines focus on analysing the sector's outputs, products, management, and resources, including the extent to which those resources are being utilised.

Discussions following the panel presentations emphasised the importance of looking at education through the “lens of a system” rather than the “lens of a sector.” Marope pointed out that the impact of education goes beyond the sector, into areas such as countries' economic development and the development of ethics in those countries. Hence, there is a need to take a systems approach where the Ministry of Education becomes an executing agent, rather than taking a sector approach and focusing on the traditional “executor.” Referring to the RISE 4x4, Pritchett stated that management is not just about the Ministry, as there might be a lack of coherence in the system which may be causing problems. Dr. Alec Gershberg from the University of Pennsylvania, a RISE team member and one of the workshop participants, distinguished between system and sector by stating that a “system analysis” deals with power and politics, whereas the literature and discourse around “sector analysis” do not use these terms or discuss power and politics.

The second half of the workshop had participants breaking out into small groups to discuss some of the system diagnostic tools such as SABER, GEQAF, and ESA. The breakout groups mapped these tools under the broad headings of “Teachers and Teaching”, “Student Assessment”, and “Equity and Inclusion.” The breakout groups generated discussions about the purpose, potential, and ownership of diagnostic tools to better understand education systems.

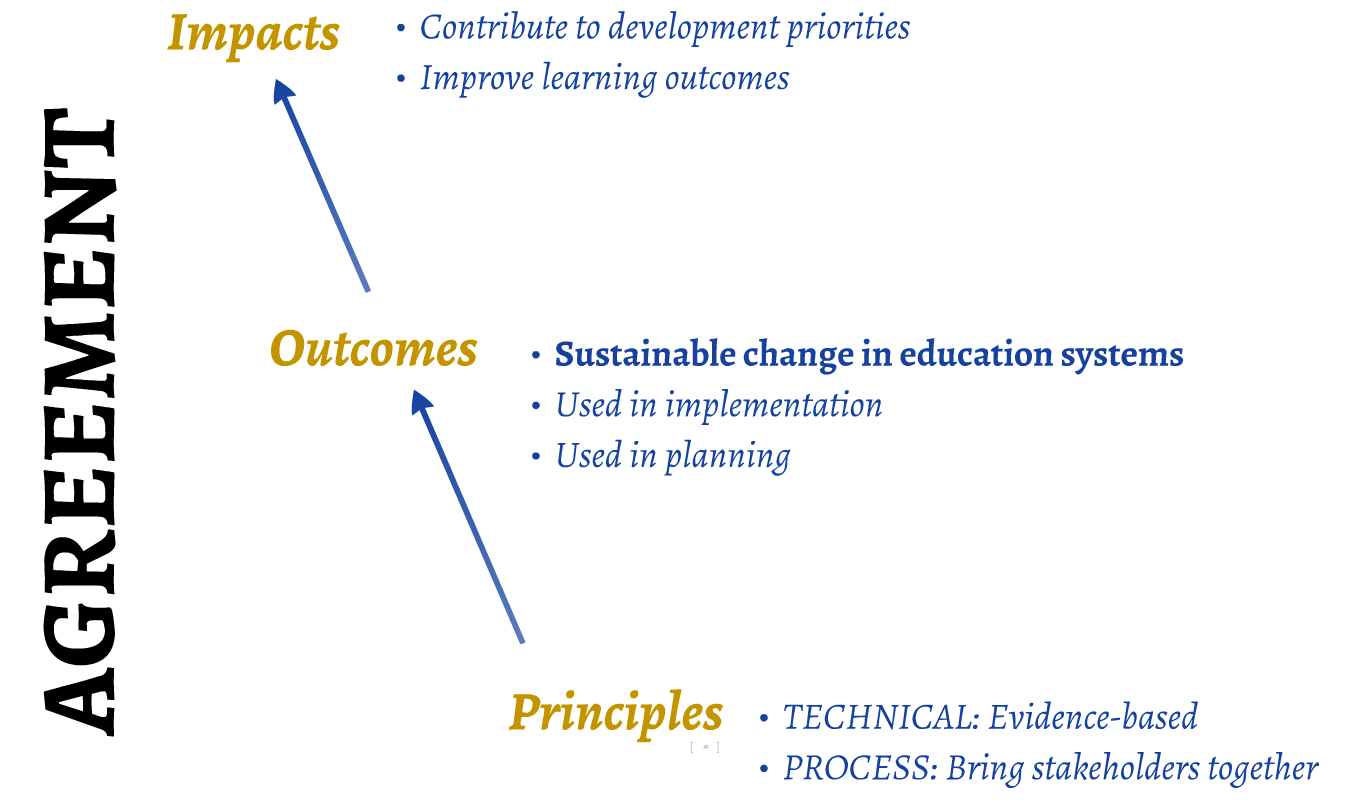

Echoing some of the discussions during the workshop, Moira Faul presented her work, which consisted of interviewing actors who are involved in creating different system diagnostic tools. She pointed out that there is broad agreement on the purpose of these tools, but that there are various bottlenecks. Both the technical aspect (evidence) and the process aspect (bringing different stakeholders together and working on building consensus) need to work well for education systems to improve significantly.

In her closing remarks, Laura Savage stated that there are a number of different interpretations of systems and diagnostics: what they do and what they are supposed to do. Education researchers still have a lot of work ahead of them to reach consensus about system diagnostics in the way that the health and agriculture sectors have. We need better understanding and agreement on how we define a system diagnostic. We need a comprehensive common framework to identify problems and to fully understand relationships within education systems if we are to solve the “learning crisis.”

RISE blog posts and podcasts reflect the views of the authors and do not necessarily represent the views of the organisation or our funders.